It’s hard to believe you’d have an economy at all if you gave pink slips to more than half the labor force. But that—in slow motion—is what the industrial revolution did to the workforce of the early 19th century. Two hundred years ago, 70 percent of American workers lived on the farm. Today automation has eliminated all but 1 percent of their jobs, replacing them (and their work animals) with machines. But the displaced workers did not sit idle. Instead, automation created hundreds of millions of jobs in entirely new fields. Those who once farmed were now manning the legions of factories that churned out farm equipment, cars, and other industrial products. Since then, wave upon wave of new occupations have arrived—appliance repairman, offset printer, food chemist, photographer, web designer—each building on previous automation. Today, the vast majority of us are doing jobs that no farmer from the 1800s could have imagined.

It may be hard to believe, but before the end of this century, 70 percent of today’s occupations will likewise be replaced by automation. Yes, dear reader, even you will have your job taken away by machines. In other words, robot replacement is just a matter of time. This upheaval is being led by a second wave of automation, one that is centered on artificial cognition, cheap sensors, machine learning, and distributed smarts. This deep automation will touch all jobs, from manual labor to knowledge work.

First, machines will consolidate their gains in already-automated industries. After robots finish replacing assembly line workers, they will replace the workers in warehouses. Speedy bots able to lift 150 pounds all day long will retrieve boxes, sort them, and load them onto trucks. Fruit and vegetable picking will continue to be robotized until no humans pick outside of specialty farms. Pharmacies will feature a single pill-dispensing robot in the back while the pharmacists focus on patient consulting. Next, the more dexterous chores of cleaning in offices and schools will be taken over by late-night robots, starting with easy-to-do floors and windows and eventually getting to toilets. The highway legs of long-haul trucking routes will be driven by robots embedded in truck cabs.

All the while, robots will continue their migration into white-collar work. We already have artificial intelligence in many of our machines; we just don’t call it that. Witness one piece of software by Narrative Science (profiled in issue 20.05) that can write newspaper stories about sports games directly from the games’ stats or generate a synopsis of a company’s stock performance each day from bits of text around the web. Any job dealing with reams of paperwork will be taken over by bots, including much of medicine. Even those areas of medicine not defined by paperwork, such as surgery, are becoming increasingly robotic. The rote tasks of any information-intensive job can be automated. It doesn’t matter if you are a doctor, lawyer, architect, reporter, or even programmer: The robot takeover will be epic.

And it has already begun.


Here’s why we’re at the inflection point: Machines are acquiring smarts.

We have preconceptions about how an intelligent robot should look and act, and these can blind us to what is already happening around us. To demand that artificial intelligence be humanlike is the same flawed logic as demanding that artificial flying be birdlike, with flapping wings. Robots will think different. To see how far artificial intelligence has penetrated our lives, we need to shed the idea that it will be humanlike.

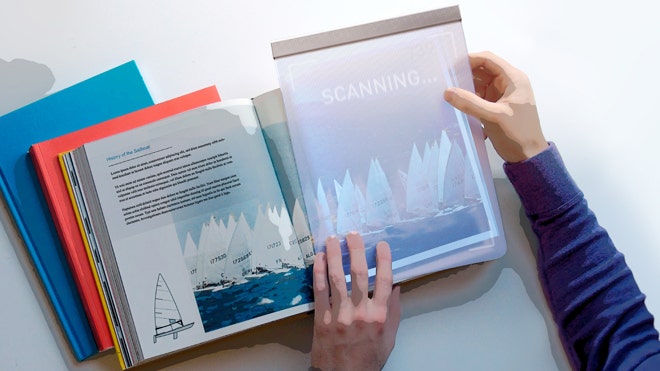

Consider Baxter, a revolutionary new workbot from Rethink Robotics. Designed by Rodney Brooks, the former MIT professor who invented the best-selling Roomba vacuum cleaner and its descendants, Baxter is an early example of a new class of industrial robots created to work alongside humans. Baxter does not look impressive. It’s got big strong arms and a flatscreen display like many industrial bots. And Baxter’s hands perform repetitive manual tasks, just as factory robots do. But it’s different in three significant ways.

First, it can look around and indicate where it is looking by shifting the cartoon eyes on its head. It can perceive humans working near it and avoid injuring them. And workers can see whether it sees them. Previous industrial robots couldn’t do this, which means that working robots have to be physically segregated from humans. The typical factory robot is imprisoned within a chain-link fence or caged in a glass case. They are simply too dangerous to be around, because they are oblivious to others. This isolation prevents such robots from working in a small shop, where isolation is not practical. Optimally, workers should be able to get materials to and from the robot or to tweak its controls by hand throughout the workday; isolation makes that difficult. Baxter, however, is aware. Using force-feedback technology to feel if it is colliding with a person or another bot, it is courteous. You can plug it into a wall socket in your garage and easily work right next to it.

Second, anyone can train Baxter. It is not as fast, strong, or precise as other industrial robots, but it is smarter. To train the bot you simply grab its arms and guide them in the correct motions and sequence. It’s a kind of “watch me do this” routine. Baxter learns the procedure and then repeats it. Any worker is capable of this show-and-tell; you don’t even have to be literate. Previous workbots required highly educated engineers and crack programmers to write thousands of lines of code (and then debug them) in order to instruct the robot in the simplest change of task. The code has to be loaded in batch mode, i.e., in large, infrequent batches, because the robot cannot be reprogrammed while it is being used. Turns out the real cost of the typical industrial robot is not its hardware but its operation. Industrial robots cost $100,000-plus to purchase but can require four times that amount over a lifespan to program, train, and maintain. The costs pile up until the average lifetime bill for an industrial robot is half a million dollars or more.

The third difference, then, is that Baxter is cheap. Priced at $22,000, it’s in a different league compared with the $500,000 total bill of its predecessors. It is as if those established robots, with their batch-mode programming, are the mainframe computers of the robot world, and Baxter is the first PC robot. It is likely to be dismissed as a hobbyist toy, missing key features like sub-millimeter precision, and not serious enough. But as with the PC, and unlike the mainframe, the user can interact with it directly, immediately, without waiting for experts to mediate—and use it for nonserious, even frivolous things. It’s cheap enough that small-time manufacturers can afford one to package up their wares or custom paint their product or run their 3-D printing machine. Or you could staff up a factory that makes iPhones.
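The cost comparison above can be checked with a few lines of arithmetic. This is only a sketch using the round figures quoted in the text; the constant and function names are made up for illustration, and it compares Baxter's purchase price with the older robots' lifetime bill, as the article does, because no lifetime operating cost for Baxter is given.

```python
# Round figures quoted in the article, treated here as assumptions.
PURCHASE_PRICE = 100_000   # "$100,000-plus" sticker price of a typical industrial robot
OPERATING_MULTIPLE = 4     # programming, training, maintenance: "four times that amount"
BAXTER_PRICE = 22_000      # Baxter's quoted purchase price


def lifetime_cost(purchase_price: int, operating_multiple: int) -> int:
    """Purchase price plus lifetime operating costs, given as a multiple of that price."""
    return purchase_price + operating_multiple * purchase_price


traditional_total = lifetime_cost(PURCHASE_PRICE, OPERATING_MULTIPLE)
price_ratio = round(traditional_total / BAXTER_PRICE, 1)

print(traditional_total)  # 500000
print(price_ratio)        # 22.7
```

On these numbers the traditional robot's lifetime bill is roughly 23 times Baxter's sticker price. The real gap would depend on what Baxter costs to train and maintain, which the hands-on training described earlier is meant to keep low.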

Photo: Peter Yang

Baxter was invented in a century-old brick building near the Charles River in Boston. In 1895 the building was a manufacturing marvel in the very center of the new manufacturing world. It even generated its own electricity. For a hundred years the factories inside its walls changed the world around us. Now the capabilities of Baxter and the approaching cascade of superior robot workers spur Brooks to speculate on how these robots will shift manufacturing in a disruption greater than the last revolution. Looking out his office window at the former industrial neighborhood, he says, “Right now we think of manufacturing as happening in China. But as manufacturing costs sink because of robots, the costs of transportation become a far greater factor than the cost of production. Nearby will be cheap. So we’ll get this network of locally franchised factories, where most things will be made within 5 miles of where they are needed.”

That may be true of making stuff, but a lot of jobs left in the world for humans are service jobs. I ask Brooks to walk with me through a local McDonald’s and point out the jobs that his kind of robots can replace. He demurs and suggests it might be 30 years before robots will cook for us. “In a fast food place you’re not doing the same task very long. You’re always changing things on the fly, so you need special solutions. We are not trying to sell a specific solution. We are building a general-purpose machine that other workers can set up themselves and work alongside.” And once we can cowork with robots right next to us, it’s inevitable that our tasks will bleed together, and soon our old work will become theirs—and our new work will become something we can hardly imagine.

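To understand how robot replacement will happen, it’s useful to break down our relationship with robots into four categories, as summed up in this chart:

| | Seem like jobs for humans | Seem like jobs for machines |
|---|---|---|
| Existing jobs | A: Jobs humans can do but robots can do even better | B: Jobs that humans can’t do but robots can |
| New jobs | D: Jobs that only humans can do, at first | C: New jobs created by automation, including ones we did not know we wanted done |

The rows indicate whether robots will take over existing jobs or make new ones, and the columns indicate whether these jobs seem (at first) like jobs for humans or for machines.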

Let’s begin with quadrant A: jobs humans can do but robots can do even better. Humans can weave cotton cloth with great effort, but automated looms make perfect cloth, by the mile, for a few cents. The only reason to buy handmade cloth today is that you want the imperfections humans introduce. We no longer value irregularities while traveling 70 miles per hour, though, so the fewer humans who touch our car as it is being made, the better.

And yet for more complicated chores, we still tend to believe computers and robots can’t be trusted. That’s why we’ve been slow to acknowledge how they’ve mastered some conceptual routines, in some cases even surpassing their mastery of physical routines. A computerized brain known as the autopilot can fly a 787 jet unaided, but irrationally we place human pilots in the cockpit to babysit the autopilot “just in case.” In the 1990s, computerized mortgage appraisals replaced human appraisers wholesale. Much tax preparation has gone to computers, as well as routine x-ray analysis and pretrial evidence-gathering—all once done by highly paid smart people. We’ve accepted utter reliability in robot manufacturing; soon we’ll accept it in robotic intelligence and service.

Next is quadrant B: jobs that humans can’t do but robots can. A trivial example: Humans have trouble making a single brass screw unassisted, but automation can produce a thousand exact ones per hour. Without automation, we could not make a single computer chip—a job that requires degrees of precision, control, and unwavering attention that our animal bodies don’t possess. Likewise no human, indeed no group of humans, no matter their education, can quickly search through all the web pages in the world to uncover the one page revealing the price of eggs in Katmandu yesterday. Every time you click on the search button you are employing a robot to do something we as a species are unable to do alone.

While the displacement of formerly human jobs gets all the headlines, the greatest benefits bestowed by robots and automation come from their occupation of jobs we are unable to do. We don’t have the attention span to inspect every square millimeter of every CAT scan looking for cancer cells. We don’t have the millisecond reflexes needed to inflate molten glass into the shape of a bottle. We don’t have an infallible memory to keep track of every pitch in Major League Baseball and calculate the probability of the next pitch in real time.

We aren’t giving “good jobs” to robots. Most of the time we are giving them jobs we could never do. Without them, these jobs would remain undone.

Now let’s consider quadrant C, the new jobs created by automation—including the jobs that we did not know we wanted done. This is the greatest genius of the robot takeover: With the assistance of robots and computerized intelligence, we already can do things we never imagined doing 150 years ago. We can remove a tumor in our gut through our navel, make a talking-picture video of our wedding, drive a cart on Mars, print a pattern on fabric that a friend mailed to us through the air. We are doing, and are sometimes paid for doing, a million new activities that would have dazzled and shocked the farmers of 1850. These new accomplishments are not merely chores that were difficult before. Rather they are dreams that are created chiefly by the capabilities of the machines that can do them. They are jobs the machines make up.

Before we invented automobiles, air-conditioning, flatscreen video displays, and animated cartoons, no one living in ancient Rome wished they could watch cartoons while riding to Athens in climate-controlled comfort. Two hundred years ago not a single citizen of Shanghai would have told you that they would buy a tiny slab that allowed them to talk to faraway friends before they would buy indoor plumbing. Crafty AIs embedded in first-person-shooter games have given millions of teenage boys the urge, the need, to become professional game designers—a dream that no boy in Victorian times ever had. In a very real way our inventions assign us our jobs. Each successful bit of automation generates new occupations—occupations we would not have fantasized about without the prompting of the automation.

To reiterate, the bulk of new tasks created by automation are tasks only other automation can handle. Now that we have search engines like Google, we set the servant upon a thousand new errands. Google, can you tell me where my phone is? Google, can you match the people suffering depression with the doctors selling pills? Google, can you predict when the next viral epidemic will erupt? Technology is indiscriminate this way, piling up possibilities and options for both humans and machines.

It is a safe bet that the highest-earning professions in the year 2050 will depend on automations and machines that have not been invented yet. That is, we can’t see these jobs from here, because we can’t yet see the machines and technologies that will make them possible. Robots create jobs that we did not even know we wanted done.


Finally, that leaves us with quadrant D, the jobs that only humans can do—at first. The one thing humans can do that robots can’t (at least for a long while) is to decide what it is that humans want to do. This is not a trivial trick; our desires are inspired by our previous inventions, making this a circular question.

When robots and automation do our most basic work, making it relatively easy for us to be fed, clothed, and sheltered, then we are free to ask, “What are humans for?” Industrialization did more than just extend the average human lifespan. It led a greater percentage of the population to decide that humans were meant to be ballerinas, full-time musicians, mathematicians, athletes, fashion designers, yoga masters, fan-fiction authors, and folks with one-of-a-kind titles on their business cards. With the help of our machines, we could take up these roles; but of course, over time, the machines will do these as well. We’ll then be empowered to dream up yet more answers to the question “What should we do?” It will be many generations before a robot can answer that.

This postindustrial economy will keep expanding, even though most of the work is done by bots, because part of your task tomorrow will be to find, make, and complete new things to do, new things that will later become repetitive jobs for the robots. In the coming years robot-driven cars and trucks will become ubiquitous; this automation will spawn the new human occupation of trip optimizer, a person who tweaks the traffic system for optimal energy and time usage. Routine robo-surgery will necessitate the new skills of keeping machines sterile. When automatic self-tracking of all your activities becomes the normal thing to do, a new breed of professional analysts will arise to help you make sense of the data. And of course we will need a whole army of robot nannies, dedicated to keeping your personal bots up and running. Each of these new vocations will in turn be taken over by robots later.

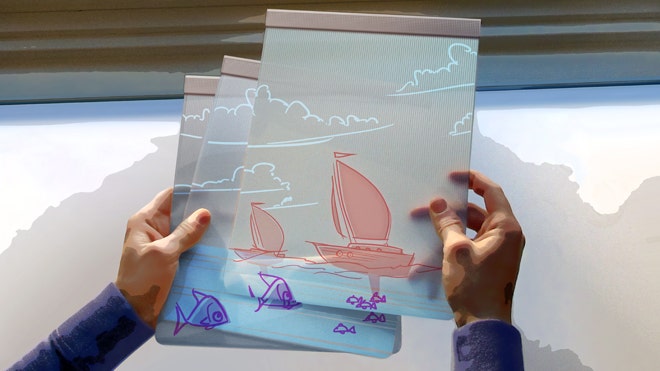

The real revolution erupts when everyone has personal workbots, the descendants of Baxter, at their beck and call. Imagine you run a small organic farm. Your fleet of worker bots do all the weeding, pest control, and harvesting of produce, as directed by an overseer bot, embodied by a mesh of probes in the soil. One day your task might be to research which variety of heirloom tomato to plant; the next day it might be to update your custom labels. The bots perform everything else that can be measured.

Right now it seems unthinkable: We can’t imagine a bot that can assemble a stack of ingredients into a gift or manufacture spare parts for our lawn mower or fabricate materials for our new kitchen. We can’t imagine our nephews and nieces running a dozen workbots in their garage, churning out inverters for their friend’s electric-vehicle startup. We can’t imagine our children becoming appliance designers, making custom batches of liquid-nitrogen dessert machines to sell to the millionaires in China. But that’s what personal robot automation will enable.

Everyone will have access to a personal robot, but simply owning one will not guarantee success. Rather, success will go to those who innovate in the organization, optimization, and customization of the process of getting work done with bots and machines. Geographical clusters of production will matter, not for any differential in labor costs but because of the differential in human expertise. It’s human-robot symbiosis. Our human assignment will be to keep making jobs for robots—and that is a task that will never be finished. So we will always have at least that one “job.”

In the coming years our relationships with robots will become ever more complex. But already a recurring pattern is emerging. No matter what your current job or your salary, you will progress through these Seven Stages of Robot Replacement, again and again:

1. A robot/computer cannot possibly do the tasks I do.

[Later.]

2. OK, it can do a lot of them, but it can’t do everything I do.

[Later.]

3. OK, it can do everything I do, except it needs me when it breaks down, which is often.

[Later.]

4. OK, it operates flawlessly on routine stuff, but I need to train it for new tasks.

[Later.]

5. OK, it can have my old boring job, because it’s obvious that was not a job that humans were meant to do.

[Later.]

6. Wow, now that robots are doing my old job, my new job is much more fun and pays more!

[Later.]

7. I am so glad a robot/computer cannot possibly do what I do now.

This is not a race against the machines. If we race against them, we lose. This is a race with the machines. You’ll be paid in the future based on how well you work with robots. Ninety percent of your coworkers will be unseen machines. Most of what you do will not be possible without them. And there will be a blurry line between what you do and what they do. You might no longer think of it as a job, at least at first, because anything that seems like drudgery will be done by robots.

We need to let robots take over. They will do jobs we have been doing, and do them much better than we can. They will do jobs we can’t do at all. They will do jobs we never imagined even needed to be done. And they will help us discover new jobs for ourselves, new tasks that expand who we are. They will let us focus on becoming more human than we were.

Let the robots take the jobs, and let them help us dream up new work that matters.
